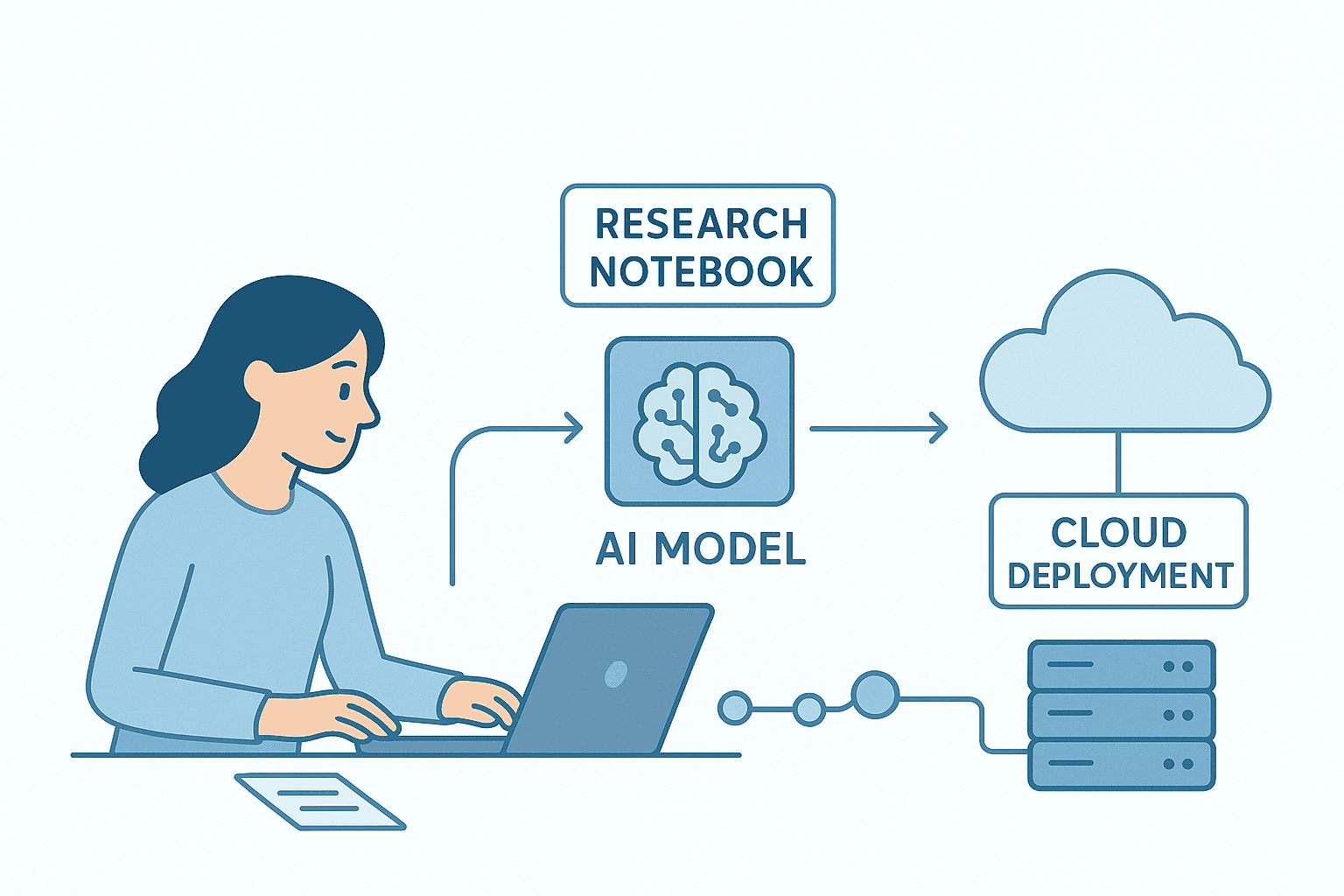

From Notebook to Production: The Real-World Path of an AI Model

Learn how to take your ML model from notebook to production with this practical MLOps guide — deployment, CI/CD, monitoring, and real-world examples for 2025.

MeetXpert Team

Most machine learning models never make it beyond the notebook.

They perform well in isolation but break down under messy real-world data, scaling demands, or production reliability requirements.

This post bridges that gap, walking you through how to turn your experimental notebook into a production-grade ML system that’s scalable, monitored, and maintainable.

1. Why Notebooks Don’t Cut It in Production

Notebooks are perfect for exploration, but production systems demand consistency, reproducibility, and observability.

Common issues include:

- Environment mismatch between dev and prod

- Hidden dependencies and unfixed random seeds

- No monitoring or rollback

- Manual retraining and deployment

A real-world ML system needs automation, version control, and CI/CD pipelines, not manual uploads.
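Two of the issues above, environment mismatch and unfixed random seeds, can be partly handled in code. The sketch below seeds Python's built-in `random` module and records interpreter, OS, and package versions so a training run can later be compared against production. The names `set_seeds` and `environment_snapshot` are illustrative, not from any library. Projects that use NumPy or PyTorch would also need to seed those (for example with `numpy.random.seed` or `torch.manual_seed`).

```python
import json
import platform
import random
import sys
from importlib import metadata


def set_seeds(seed: int) -> None:
    """Seed Python's built-in RNG so repeated runs draw the same numbers.

    Assumption: projects using NumPy, PyTorch, etc. must seed those too.
    """
    random.seed(seed)


def environment_snapshot(packages: list[str]) -> dict:
    """Record interpreter, OS, and package versions for a training run."""
    versions = {}
    for name in packages:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None  # package not installed in this environment
    return {
        "python": sys.version.split()[0],
        "platform": platform.platform(),
        "packages": versions,
    }


if __name__ == "__main__":
    set_seeds(42)
    print(json.dumps(environment_snapshot(["pip"]), indent=2))
```

Saving this snapshot next to each trained model makes a dev/prod mismatch easier to spot, though it complements pinned dependencies and a container image rather than replacing them.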

2. Plan for Production from Day One

Before building your model, define your production needs clearly:

- Latency: Real-time or batch inference?

- Reliability: What uptime do you expect?

- Scalability: Can it handle traffic spikes?

- Reproducibility: Can you retrain it identically?

- Monitoring: How will you catch drift or errors?

💡 Pro tip: Think like an engineer, not just a researcher. Production starts with planning, not patching.
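One way to make these requirements concrete is to write them down as numbers the team can test against. The sketch below is a hypothetical `ProductionRequirements` record with a p95 latency budget and an availability target; the field names and example values are assumptions for illustration only.

```python
import statistics
from dataclasses import dataclass


@dataclass(frozen=True)
class ProductionRequirements:
    """Hypothetical record of production targets; values are examples only."""

    mode: str              # "realtime" or "batch"
    p95_latency_ms: float  # latency budget for the 95th percentile
    availability: float    # uptime target, e.g. 0.999 for 99.9%


def p95(samples_ms: list[float]) -> float:
    """95th percentile of latency samples (needs at least two samples)."""
    return statistics.quantiles(samples_ms, n=100, method="inclusive")[94]


def meets_latency_budget(samples_ms: list[float], req: ProductionRequirements) -> bool:
    """True if the measured p95 latency fits within the budget."""
    return p95(samples_ms) <= req.p95_latency_ms


def allowed_downtime_minutes(req: ProductionRequirements, days: int = 30) -> float:
    """Downtime the availability target allows over `days` days."""
    return (1 - req.availability) * days * 24 * 60
```

For example, a 99.9% availability target leaves roughly 43 minutes of downtime in a 30-day month, and `meets_latency_budget` can be run against latency samples collected during a load test.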

3. Data Pipelines & Validation

A solid pipeline ensures your model gets consistent, reliable data every time.

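Example Python validation (legacy Great Expectations API):

```python
import great_expectations as ge  # legacy 0.x API; GX Core 1.x uses expectation classes instead

gdf = ge.from_pandas(df)  # wrap an existing pandas DataFrame `df`
gdf.expect_column_values_to_not_be_null("user_id")
gdf.expect_column_values_to_be_between("age", min_value=18, max_value=99)
```

Tools like Great Expectations and Tecton help enforce schema checks, detect drift, and keep data quality high.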
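To show what such checks do without pulling in a library, here is a plain-Python sketch that applies the same two rules (non-null `user_id`, `age` between 18 and 99) to a list of row dictionaries and reports the failing row indices. The helper names are hypothetical. The range check skips missing values, since the null check already reports them.

```python
from typing import Any, Dict, List

Row = Dict[str, Any]


def check_not_null(rows: List[Row], column: str) -> List[int]:
    """Indices of rows where `column` is missing or None."""
    return [i for i, row in enumerate(rows) if row.get(column) is None]


def check_between(rows: List[Row], column: str, low: float, high: float) -> List[int]:
    """Indices of rows whose `column` value lies outside [low, high].

    Missing values are skipped here; check_not_null reports them instead.
    """
    return [
        i
        for i, row in enumerate(rows)
        if row.get(column) is not None and not (low <= row[column] <= high)
    ]


def validate_rows(rows: List[Row]) -> Dict[str, List[int]]:
    """Run both rules and return only the ones that failed."""
    results = {
        "user_id not null": check_not_null(rows, "user_id"),
        "age in [18, 99]": check_between(rows, "age", 18, 99),
    }
    return {rule: bad for rule, bad in results.items() if bad}
```

A pipeline would typically stop, or quarantine the batch, whenever the returned report is non-empty.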

4. Model Deployment in the Real World

Once your data is consistent, packaging and deploying your model is the next step.

Containerization packages your model together with its runtime and dependencies, so it behaves the same on every machine, local or cloud.

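Example Docker setup:

```dockerfile
FROM python:3.11-slim

WORKDIR /app

# Install dependencies first so this layer stays cached when only code changes
COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY . .

CMD ["python", "serve_model.py"]
```

You can serve the model with FastAPI or BentoML, or deploy it on a managed platform like SageMaker, and use Kubernetes for scaling and rollbacks.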
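The Dockerfile runs a `serve_model.py` script that is not shown. As a rough, dependency-free sketch of what its core could look like, the code below exposes `/predict` and `/health` endpoints with Python's standard `http.server`. Everything here is an assumption: the "model" is a fixed linear function with hypothetical `WEIGHTS` and `BIAS`, and clients are assumed to POST JSON such as `{"features": [1.0, 2.0]}`. A real service would load a trained artifact and would more likely use FastAPI or BentoML.

```python
"""Core of a hypothetical serve_model.py, using only the standard library."""
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

WEIGHTS = [0.5, -0.25]  # hypothetical parameters; a real service loads a trained artifact
BIAS = 1.0


def predict(features: list[float]) -> float:
    if len(features) != len(WEIGHTS):
        raise ValueError(f"expected {len(WEIGHTS)} features, got {len(features)}")
    return BIAS + sum(w * x for w, x in zip(WEIGHTS, features))


class PredictHandler(BaseHTTPRequestHandler):
    def do_GET(self) -> None:
        # Lightweight health check, e.g. for a Kubernetes liveness probe
        if self.path == "/health":
            self._send(200, {"status": "ok"})
        else:
            self._send(404, {"error": "not found"})

    def do_POST(self) -> None:
        if self.path != "/predict":
            self._send(404, {"error": "not found"})
            return
        try:
            length = int(self.headers.get("Content-Length", 0))
            payload = json.loads(self.rfile.read(length))
            result = predict(payload["features"])
        except (ValueError, KeyError, TypeError) as exc:
            # Bad JSON, missing "features", or wrong feature count/type
            self._send(400, {"error": str(exc)})
            return
        self._send(200, {"prediction": result})

    def _send(self, status: int, body: dict) -> None:
        data = json.dumps(body).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def make_server(host: str = "0.0.0.0", port: int = 8000) -> HTTPServer:
    # Binding to 0.0.0.0 makes the server reachable from outside the container
    return HTTPServer((host, port), PredictHandler)
```

In `serve_model.py` itself, the entry point would call `make_server().serve_forever()` under an `if __name__ == "__main__":` guard. A request such as `curl -X POST localhost:8000/predict -d '{"features": [2.0, 4.0]}'` should then return `{"prediction": 1.0}`. `HTTPServer` handles one request at a time; `http.server.ThreadingHTTPServer` is a drop-in option for concurrent requests.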

5. Build an MLOps Pipeline that Works

Once your model runs reliably, automate everything around it.

That’s where MLOps (Machine Learning Operations) comes in.

It bridges the gap between development and production, letting you push updates safely without breaking things.

Your MLOps flow should include:

- Data validation

- Automated training and testing

- Model versioning

- CI/CD integration

- Continuous monitoring

Tools like MLflow, Kubeflow, and GitHub Actions can handle these workflows at scale.
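To make the flow above concrete, the sketch below chains hypothetical validation, training, evaluation, and versioning steps into one function with a quality gate. It deliberately uses a one-feature least-squares model and only the standard library. In practice, tools like MLflow or Kubeflow would track these steps, and a CI job (for example in GitHub Actions) would call something like the illustrative `run_pipeline` on every change.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, List

Row = Dict[str, float]


@dataclass(frozen=True)
class LinearModel:
    slope: float
    intercept: float

    def predict(self, x: float) -> float:
        return self.slope * x + self.intercept


def check_rows(rows: List[Row]) -> List[Row]:
    """Data validation: fail fast on rows with missing values."""
    bad = [r for r in rows if r.get("x") is None or r.get("y") is None]
    if bad:
        raise ValueError(f"{len(bad)} invalid rows")
    return rows


def train(rows: List[Row]) -> LinearModel:
    """Training: ordinary least squares for a single feature."""
    xs = [r["x"] for r in rows]
    ys = [r["y"] for r in rows]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return LinearModel(slope, my - slope * mx)


def evaluate(model: LinearModel, rows: List[Row]) -> float:
    """Testing: mean absolute error on held-out rows."""
    return sum(abs(model.predict(r["x"]) - r["y"]) for r in rows) / len(rows)


def version_id(rows: List[Row], model: LinearModel) -> str:
    """Versioning: short hash of the training data plus learned parameters."""
    blob = json.dumps(
        {"data": rows, "params": [model.slope, model.intercept]}, sort_keys=True
    )
    return hashlib.sha256(blob.encode("utf-8")).hexdigest()[:12]


def run_pipeline(train_rows: List[Row], test_rows: List[Row], max_mae: float) -> dict:
    model = train(check_rows(train_rows))
    mae = evaluate(model, check_rows(test_rows))
    return {
        "version": version_id(train_rows, model),
        "mae": mae,
        "promote": mae <= max_mae,  # quality gate: only passing models ship
    }
```

The key design choice is that `promote` is computed rather than decided by hand: a model whose holdout error exceeds `max_mae` never reaches deployment, and the version ID changes whenever the training data or learned parameters change.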

6. Monitor What Matters

Once deployed, your model’s real test begins.

Models degrade silently — data drifts, user behavior changes, or new patterns emerge.

To stay ahead:

- Track input data drift

- Monitor accuracy and latency

- Set up alert systems for anomalies

- Automate retraining triggers

💡 Pro tip: Use dashboards and observability tools like Evidently AI or WhyLabs to keep an eye on your models.
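A common, simple way to track input drift is the Population Stability Index (PSI), which compares how a feature's values fall into bins in a reference sample versus live traffic. The sketch below computes PSI for one numeric feature and turns it into a retraining flag. The bin edges and the 0.25 threshold are assumptions: 0.1 and 0.25 are widely used rules of thumb, not a standard, and dedicated tools offer more robust drift tests.

```python
import math
from typing import List, Sequence, Tuple


def bin_fractions(values: Sequence[float], edges: Sequence[float]) -> List[float]:
    """Share of values in each bin; `edges` must be sorted ascending.

    Bin 0 holds values below edges[0]; the last bin holds values >= edges[-1].
    """
    counts = [0] * (len(edges) + 1)
    for v in values:
        counts[sum(v >= e for e in edges)] += 1
    return [c / len(values) for c in counts]


def psi(expected: Sequence[float], actual: Sequence[float],
        edges: Sequence[float], eps: float = 1e-6) -> float:
    """Population Stability Index: sum of (a - e) * ln(a / e) over bins."""
    score = 0.0
    for e, a in zip(bin_fractions(expected, edges), bin_fractions(actual, edges)):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        score += (a - e) * math.log(a / e)
    return score


def drift_alert(expected: Sequence[float], actual: Sequence[float],
                edges: Sequence[float], threshold: float = 0.25) -> Tuple[float, bool]:
    """Return the PSI and whether it exceeds the (assumed) retraining threshold."""
    score = psi(expected, actual, edges)
    return score, score > threshold
```

In a real system, `expected` would come from the training data and `actual` from a recent window of production inputs, with the check scheduled to run periodically and the flag feeding an alert or a retraining job.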

7. Avoid These Common Pitfalls

Even experienced ML engineers slip on these:

- ❌ Skipping environment consistency checks

- ❌ Forgetting dataset versioning

- ❌ Manual deployments without rollback

- ❌ No defined retraining cadence

💡 Rule of thumb: If it can’t be repeated automatically, it’s not production-ready.
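The "manual deployments without rollback" pitfall is easier to avoid when every deployment goes through a registry that remembers what was live before. The sketch below is a hypothetical in-memory `ModelRegistry`; MLflow's Model Registry, for example, keeps versioned models in persistent storage instead.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ModelRegistry:
    """Hypothetical in-memory registry of model versions and deployments."""

    versions: list[str] = field(default_factory=list)  # every registered version
    history: list[str] = field(default_factory=list)   # deployments, newest last

    def register(self, version: str) -> None:
        if version in self.versions:
            raise ValueError(f"version {version!r} is already registered")
        self.versions.append(version)

    def deploy(self, version: str) -> None:
        # Only registered (built, tested, versioned) models can go live
        if version not in self.versions:
            raise KeyError(f"unknown version {version!r}")
        self.history.append(version)

    @property
    def live(self) -> Optional[str]:
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        """Undo the latest deployment and return the version that is live again."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier deployment to roll back to")
        self.history.pop()
        return self.history[-1]
```

Because rollback only moves back to an already-registered version, it can be automated (for example, triggered by a failing health check) instead of requiring a manual redeploy.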

8. From Model to Product Thinking

A production-ready model doesn’t just predict; it delivers consistent value.

Think beyond metrics like F1 score. Consider user experience, latency, maintainability, and feedback loops.

This mindset shift transforms a model into a real product that scales and evolves.

Wrapping Up

Moving from notebook to production isn’t a one-time task; it’s a culture.

It’s about versioning everything, automating what you can, and keeping humans in the loop.

If you’re building an AI product or startup and want guidance on ML deployment, MLOps best practices, or real-world model scaling, connect with verified experts on MeetXpert.

Because every great AI project deserves a smooth path from notebook to production.